TFS & Go: April update - no more Chef (for now)

Sooo, we dropped Chef from our lineup. There were a number of good reasons to do so, such as:

- To properly get the most out of Chef in a Windows environment, you really need DSC (Desired State Configuration). We are not using DSC at this time (it's on the to-do list).

- We were starting to twist it into doing deployment tasks (such as 'stop the AppPool, deploy, start the AppPool'), and that is not what it's meant for.

We found a way to do the same thing via (easy?) PowerShell scripts and modules! Yes, we have really started having fun. Not only that, but all of this is in TFS and part of our deployment chain.
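To make the 'stop the AppPool, deploy, start the AppPool' sequence concrete, here is a minimal sketch of how such a step could be wrapped in PowerShell. `Invoke-WithAppPoolStopped` and its parameters are hypothetical names for illustration, not part of our module; by default it calls the `Set-RemoteAppPool` function shown further down in this post. The `try`/`finally` is there so the AppPool gets started again even if the deploy step throws.

```powershell
# Hypothetical helper: run a deploy step while the AppPool is stopped.
function Invoke-WithAppPoolStopped {
    param(
        [Parameter(Mandatory=$true)][string]$AppPoolName,
        [Parameter(Mandatory=$true)][string]$ServerName,
        [Parameter(Mandatory=$true)][scriptblock]$DeployStep,
        # Assumption: defaults to the module's Set-RemoteAppPool;
        # a different script block can be passed in for testing.
        [scriptblock]$SetAppPool = {
            param($name, $server, $action)
            Set-RemoteAppPool $name $server $action
        }
    )

    # Stop the AppPool so it releases its file locks
    & $SetAppPool $AppPoolName $ServerName 'Stop'
    try {
        # Run whatever copies the build artifact into place
        & $DeployStep
    }
    finally {
        # Always bring the AppPool back, even if the deploy step failed
        & $SetAppPool $AppPoolName $ServerName 'Start'
    }
}
```

Passing the deploy step in as a script block keeps the stop/start bookkeeping in one place, so each pipeline only has to supply its own copy logic.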

Another big step is moving all of our pipelines into templates, and introducing a development process around new template features/fixes. This means that keeping track of pipelines is really easy for us, and much easier for the devs to deal with from a requirements standpoint.

Further - we've gotten everyone to agree to a naming convention so our template model works, plus they are now mandated to include at least a basic NUnit test - the default WebApp templates build and deploy the App plus tests!

Templating looks like this...

- AppName-Build
    - Build the App, export build artifact
    - Build the Tests, export build artifact
- AppName-QA
    - Deploy the App using our IIS functions module
    - Deploy/Execute the Tests, export test artifact
- AppName-UAT
    - Same as QA, and the same again into Prod
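The naming convention above can be sketched as a small function that lists the pipeline names for one app. This is only an illustration: `Get-PipelineName` is a hypothetical helper, and the `Prod` pipeline name is an assumption that it follows the same `AppName-Stage` pattern as the others.

```powershell
# Hypothetical helper: list the pipeline names the convention implies for one app.
function Get-PipelineName {
    param(
        [Parameter(Mandatory=$true)][string]$AppName,
        # Assumption: the Prod stage is named with the same 'AppName-Stage' pattern.
        [string[]]$Stages = @('Build', 'QA', 'UAT', 'Prod')
    )

    foreach ($stage in $Stages) {
        # Emit one pipeline name per stage, e.g. 'MyApp-Build'
        "${AppName}-${stage}"
    }
}

# Example: Get-PipelineName -AppName 'MyApp'
```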

Publicizing the process

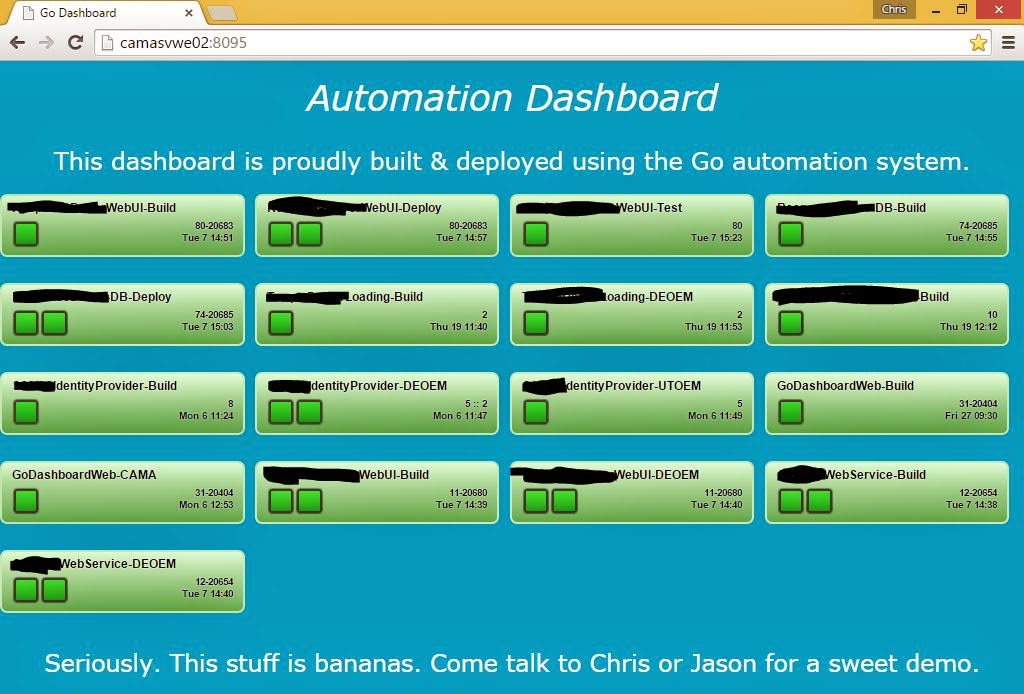

And we publicize the progress/status of all this using a custom version of this: https://github.com/LateRoomsGroup/GoDashboard

We have it as part of our Ops dashboard cycle, plus a dedicated TV outside of the CTO's office (near the dev team) displays this.

It looks like this: (so handy that right now everything is green!)

Long PowerShell scripts follow...

Sorry that this is so long and broken up...let me know if you want more info.

Here's what we're using to control Application Pools - and the reason we're doing this is that we ran into issues with files being locked during deploy time (we narrowed it down to the AppPool).

Stop-RemoteAppPool $projectName $targetServer

That is our module coming into play...here's what that does...

    # Wrapper function for Set-RemoteAppPool
    function Stop-RemoteAppPool {
        param(
            [Parameter(Position=1)][string]$appPoolName = $(Throw "Required parameter missing: appPoolName"),
            [Parameter(Position=2)][string]$serverName = $(Throw "Required parameter missing: serverName")
        )
        Set-RemoteAppPool $appPoolName $serverName Stop
    }

And 'Set-RemoteAppPool'... (it's a long one - the 'Add-CustomModule' piece just does a 'load if not loaded' kind of thing)

    # This is the main piece of the module
    function Set-RemoteAppPool {
        param(
            [Parameter(Position=1)][string]$appPoolName = $(Throw "Required parameter missing: appPoolName"),
            [Parameter(Position=2)][string]$serverName = $(Throw "Required parameter missing: serverName"),
            [Parameter(Position=3)][string]$action = $(Throw "Required parameter missing: action")
        )

        # Load our module functions so Add-CustomModule can be exported to the remote system
        Import-Module $PSScriptRoot/moduleFunctions.psm1
        $exportFunctions = "function Add-CustomModule { ${function:Add-CustomModule} }"

        ##################################################################
        # We are about to enter the remote system...
        Invoke-Command -ArgumentList $appPoolName,$exportFunctions,$action -ComputerName $serverName -ScriptBlock {
            # Create the Functions (cmdlets) on the remote system
            param($appPoolName,$importFunctions,$action)
            . ([ScriptBlock]::Create($importFunctions))

            # Ensure the WebAdministration module gets loaded on the remote system
            Add-CustomModule WebAdministration

            # Get the current state of the target appPool
            $appPoolState = (Get-WebAppPoolState $appPoolName).Value

            # Action the appPool based on parameter #3 (start)
            if ($action -eq 'Start') {
                # Action:Start - Start the AppPool from a stopped state
                if ($appPoolState -eq 'Stopped') {
                    Start-WebAppPool $appPoolName
                    # 3 seconds should be tons of time for an AppPool to start from a stopped state
                    # while still being relatively short from an automation cycle time perspective
                    Start-Sleep -s 3
                    if ((Get-WebAppPoolState $appPoolName).Value -eq 'Started') {
                        Write-Host "AppPool $appPoolName successfully started."
                    }
                    else {
                        # It could be in a starting or unknown state...since we're failing out
                        # here, let's output the current state of the appPool for debug purposes.
                        $currentAppPoolState = (Get-WebAppPoolState $appPoolName).Value
                        Throw "AppPool $appPoolName failed to start! `r`nIts current state is $currentAppPoolState."
                    }
                }
            }
            # Action the appPool based on parameter #3 (stop)
            elseif ($action -eq 'Stop') {
                # Action:Stop - Stop the AppPool from a started state
                if ($appPoolState -eq 'Started') {
                    Stop-WebAppPool $appPoolName
                    # 2 seconds should be plenty of time for an AppPool to enter a stopped state
                    Start-Sleep -s 2
                    if ((Get-WebAppPoolState $appPoolName).Value -eq 'Stopped') {
                        Write-Host "AppPool $appPoolName successfully stopped."
                    }
                    else {
                        # Wait 3 more seconds - longer than this and it's broken
                        # Perhaps there could be lots of production connections, so let's
                        # allow for that - an additional 3 seconds.
                        Start-Sleep -s 3
                        if ((Get-WebAppPoolState $appPoolName).Value -eq 'Stopped') {
                            Write-Host "AppPool $appPoolName successfully stopped."
                        }
                        else {
                            # It could be in a stopping or unknown state...since we're failing out
                            # here, let's output the current state of the appPool for debug purposes.
                            $currentAppPoolState = (Get-WebAppPoolState $appPoolName).Value
                            Throw "AppPool $appPoolName failed to stop! `r`nIts current state is $currentAppPoolState."
                        }
                    }
                }
            }
        } # End ScriptBlock - exiting the remote system...
        ##################################################################
    }
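The fixed `Start-Sleep` waits above could alternatively be replaced by polling with a timeout. This is a different technique from what our module does, sketched here only for comparison; `Wait-ForState` and its parameters are hypothetical names. On the remote system, the `-GetState` script block would presumably be something like `{ (Get-WebAppPoolState $appPoolName).Value }`.

```powershell
# Hypothetical helper: poll until a state is reached or a timeout expires.
function Wait-ForState {
    param(
        [Parameter(Mandatory=$true)][scriptblock]$GetState,
        [Parameter(Mandatory=$true)][string]$DesiredState,
        [int]$TimeoutSeconds = 5,
        [int]$PollMilliseconds = 250
    )

    $deadline = (Get-Date).AddSeconds($TimeoutSeconds)
    do {
        # Ask the caller-supplied script block for the current state
        $state = & $GetState
        if ($state -eq $DesiredState) { return $true }
        Start-Sleep -Milliseconds $PollMilliseconds
    } while ((Get-Date) -lt $deadline)

    # Timed out without ever seeing the desired state
    return $false
}
```

With something like this, a stop that completes quickly returns right away, and a slow one still fails out after the same overall budget (2 + 3 seconds in the module above).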
